Why Outsight uses LiDAR

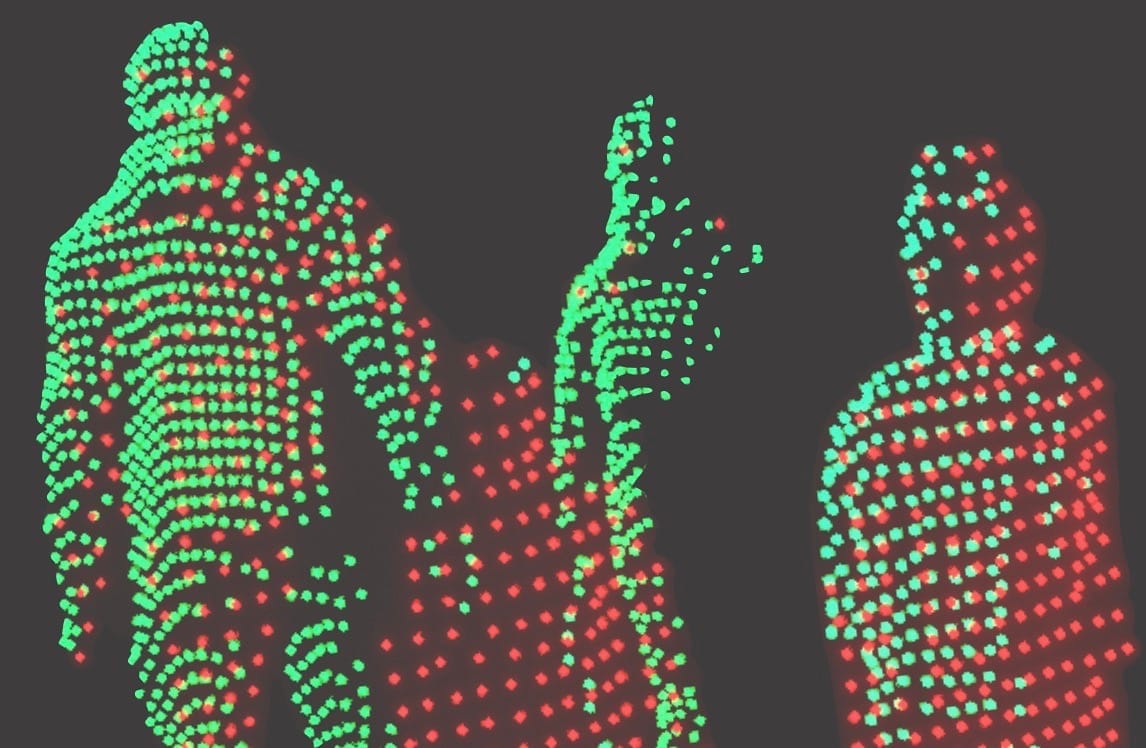

Combined with Spatial AI, LiDAR gives airports a fundamentally new capability: real-time, airport-wide understanding that powers Motional Digital Twins.

In his recent article "Why Xovis doesn't use Lidar" in International Airport Review, the co-founder and CEO of Xovis addressed the growing market demand for LiDAR, comparing it with the company's in-house stereo-vision sensors.

As pioneers, global leaders, and the most experienced team in LiDAR-enabled Spatial Intelligence solutions, we believe it is our responsibility to contribute to a healthy debate, one that, as you will see, is not really about sensors.

First, as an entrepreneur myself, I must say that I have great respect for what the Xovis team has accomplished: introducing new technology to a market is never easy.

Their success is all the more impressive because understanding the physical world is fundamentally a 3D problem. Seventeen years ago, affordable true 3D sensors did not exist, so the company cleverly built its solution around what is essentially a 2.5D approach called stereo-vision (more on this below), which remains its core technology today.

It's not about hardware

Smartphones are not just better phones, nor the result of incremental feature improvements.

Of course, smartphones expanded messaging to include images, and voice calls improved with VoIP and high-fidelity codecs, but focusing on that misses the point.

The real breakthrough was the entirely new set of possibilities they unlocked.

Still, because the original article focuses on hardware, and because real-time 3D data is a key enabler of a powerful transformation already under way in airports, it is worth clarifying this aspect.

Real 3D changes everything

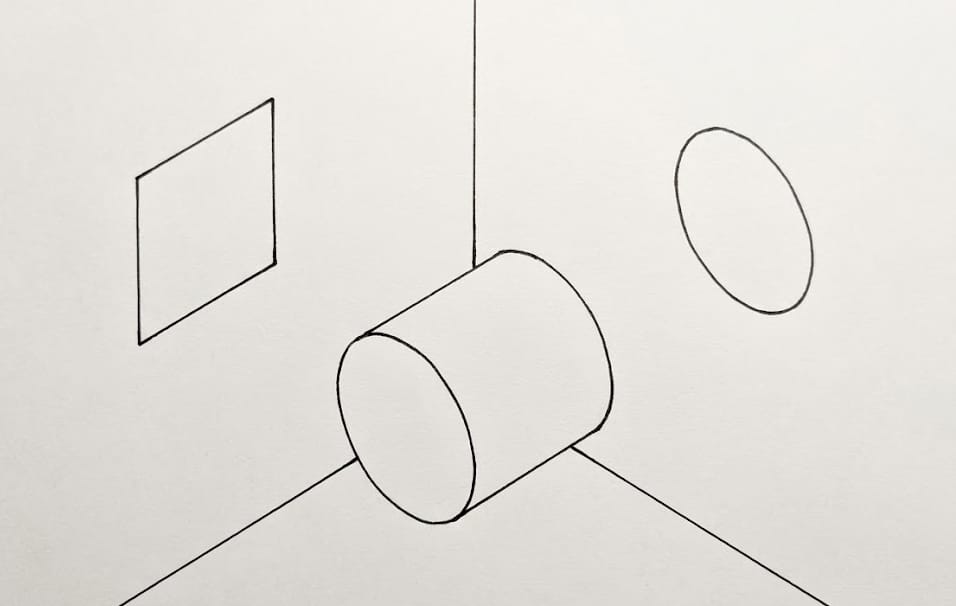

Stereo-vision uses two cameras separated by a fixed distance, called the baseline, and applies triangulation between their images to estimate depth, something a single camera cannot do.

Estimated Depth vs. True 3D Measurement

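Because depth is inferred through triangulation rather than measured directly, stereo-vision accuracy degrades with distance: the depth error grows roughly with the square of the distance to the sensor. An adult's head, a small child's head, and a piece of luggage sit at different distances from a ceiling-mounted sensor, so they are not estimated with the same precision.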
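The distance dependence follows from the standard pinhole model for a rectified stereo pair: depth is Z = f·B/d, where f is the focal length in pixels, B the baseline and d the disparity (the horizontal pixel shift of a point between the two images). Differentiating gives ΔZ ≈ Z²/(f·B)·Δd, so a fixed matching error Δd produces a depth error that grows with the square of distance. This minimal sketch uses hypothetical values (a 700 px focal length, a 10 cm baseline, a quarter-pixel matching error, a sensor about 4 m above the floor); it is not modelled on any particular product, and the function names are illustrative.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from its disparity in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def stereo_depth_error(focal_px: float, baseline_m: float, depth_m: float,
                       disparity_err_px: float = 0.25) -> float:
    """First-order depth uncertainty, dZ ~= Z**2 / (f * B) * dd.

    Obtained by differentiating Z = f * B / d with respect to d and
    substituting d = f * B / Z.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    # Hypothetical sensor parameters, for illustration only.
    f, b = 700.0, 0.10
    # Hypothetical sensor ~4 m above the floor: an adult's head is closer to it
    # than a child's head or the top of a suitcase.
    for label, z in [("adult head", 2.3), ("child head", 3.0), ("suitcase top", 3.4)]:
        err_cm = 100 * stereo_depth_error(f, b, z)
        print(f"{label:12s} at {z:.1f} m -> +/- {err_cm:.1f} cm")
```

With the same hardware, the farther target always gets the larger error, which is the effect described above.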

Only true 3D perception can capture the full physical reality of a scene.

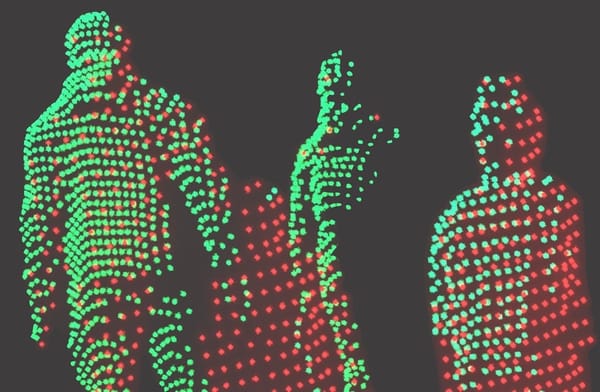

LiDAR works on a completely different principle: it emits millions of laser pulses per second in multiple directions, often covering 360 degrees, and directly measures the distance to every surface they hit by timing how long the light takes to return.
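A minimal sketch of pulsed time-of-flight ranging, the principle many LiDARs use (others use different schemes, such as FMCW). The light travels to the surface and back, so the range is half the round-trip time multiplied by the speed of light; combining that range with the beam's known direction gives one 3D point, and repeating this for every pulse builds the point cloud. `tof_distance` and `to_point` are illustrative names, not part of any sensor SDK.

```python
import math

# Speed of light in vacuum (m/s); the ~0.03% slowdown in air is ignored here.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance(round_trip_s: float) -> float:
    """Range from a pulse's round-trip time: the light goes out and back, so halve it."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(distance_m: float, azimuth_deg: float, elevation_deg: float):
    """Turn one range reading plus its beam direction into (x, y, z) in the sensor frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        distance_m * math.cos(el) * math.cos(az),
        distance_m * math.cos(el) * math.sin(az),
        distance_m * math.sin(el),
    )


if __name__ == "__main__":
    # A return arriving ~66.7 ns after emission corresponds to a surface ~10 m away.
    r = tof_distance(66.7e-9)
    print(f"range: {r:.2f} m, point: {to_point(r, 45.0, -10.0)}")
```

Here the range comes from a timing measurement rather than from matching pixels between two images, so it does not inherit the squared-distance error growth of triangulation.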

For more details, take a look at our article on this subject:

Flexible mounting

Because LiDAR captures the environment in true 3D across all directions, it does not require fixed ceiling mounting and can be installed at any angle on walls, poles, or other supports, including the ceiling.

Also, because LiDAR emits its own laser light, it is an active sensor and is largely independent of ambient lighting, from direct sunlight to complete darkness.

This mounting flexibility, unavailable with 2.5D cameras, is also crucial for understanding the next key difference.

Solving the occlusion problem

With a 2.5D sensor observing from a single viewpoint (and even more so with a 2D camera), occlusions are a major problem: an adult can easily hide a child from the camera's view. Ceiling mounting reduces this issue but does not solve it.

To understand this concept in more detail, take a look at our article:

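Because LiDAR measurements taken from different positions and angles can be expressed in one shared 3D coordinate system, several sensors can be merged into a single scene in which a person hidden from one sensor is seen by another. While this capability is enabled by LiDAR's native 3D nature, it is the Spatial AI software, also known as Physical AI, that makes it a reality.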
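The geometric step behind merging sensors can be sketched as a rigid transform of each sensor's points into a common world frame, followed by concatenation. This is a simplified illustration, not Outsight's software: it assumes each sensor's pose is already known from calibration and that sensors are level (rotated only about the vertical axis), and `sensor_to_world` and `merge_clouds` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def sensor_to_world(points: Sequence[Point], sensor_xyz: Point, yaw_deg: float) -> List[Point]:
    """Rigidly move points from one sensor's frame into the shared world frame.

    Simplification: the sensor is assumed level, i.e. rotated only about the
    vertical axis; a real calibration would use a full 3D rotation.
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = sensor_xyz
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]


def merge_clouds(clouds: Sequence[Tuple[Sequence[Point], Point, float]]) -> List[Point]:
    """Concatenate several sensors' points once all are expressed in the world frame."""
    merged: List[Point] = []
    for points, sensor_xyz, yaw_deg in clouds:
        merged.extend(sensor_to_world(points, sensor_xyz, yaw_deg))
    return merged


if __name__ == "__main__":
    # Two hypothetical sensors 3 m up, facing each other across an 8 m hall.
    # Each sees the same point (e.g. a child's head) in its own coordinates.
    sensor_a = ([(4.0, 0.0, -2.0)], (0.0, 0.0, 3.0), 0.0)
    sensor_b = ([(4.0, 0.0, -2.0)], (8.0, 0.0, 3.0), 180.0)
    print(merge_clouds([sensor_a, sensor_b]))
```

Both observations land at the same world position (about (4, 0, 1)), so if an adult blocked sensor A's view, sensor B's points would still place the child in the combined scene.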

Leveraging Automotive Reliability and Scale

LiDAR was originally developed for 3D mapping, but the automotive industry, where sensor failure can be life-threatening, has pushed it to meet extremely stringent reliability standards and certifications. That level is not comparable to stereo-vision sensors designed primarily for approximate footfall counting in retail stores.

Likewise, the industrialization required to produce millions of units operates on a scale far beyond the respectable but much smaller airport people-counting market, and it has driven LiDAR prices down to hundreds of dollars per unit for some models.

Optimized Cost and Number of Devices

The falling price of sensors is not the only factor making native 3D perception more cost-efficient to deploy: the much longer detection range (over 200 meters in some models), the wider field of view (up to 360°), and the mounting flexibility all result in a significantly lower sensor count, reducing installation, wiring, networking, processing, and maintenance costs.

We could go on listing other advantages of true 3D perception and LiDAR, including the fact that no images are ever captured, which eliminates (rather than merely reduces) the privacy risk. If you want to understand why the world's most advanced airports are deploying Outsight and LiDAR, just send us a message.

But the main point is neither hardware nor software; it is a transformative capability that simply was not possible before.

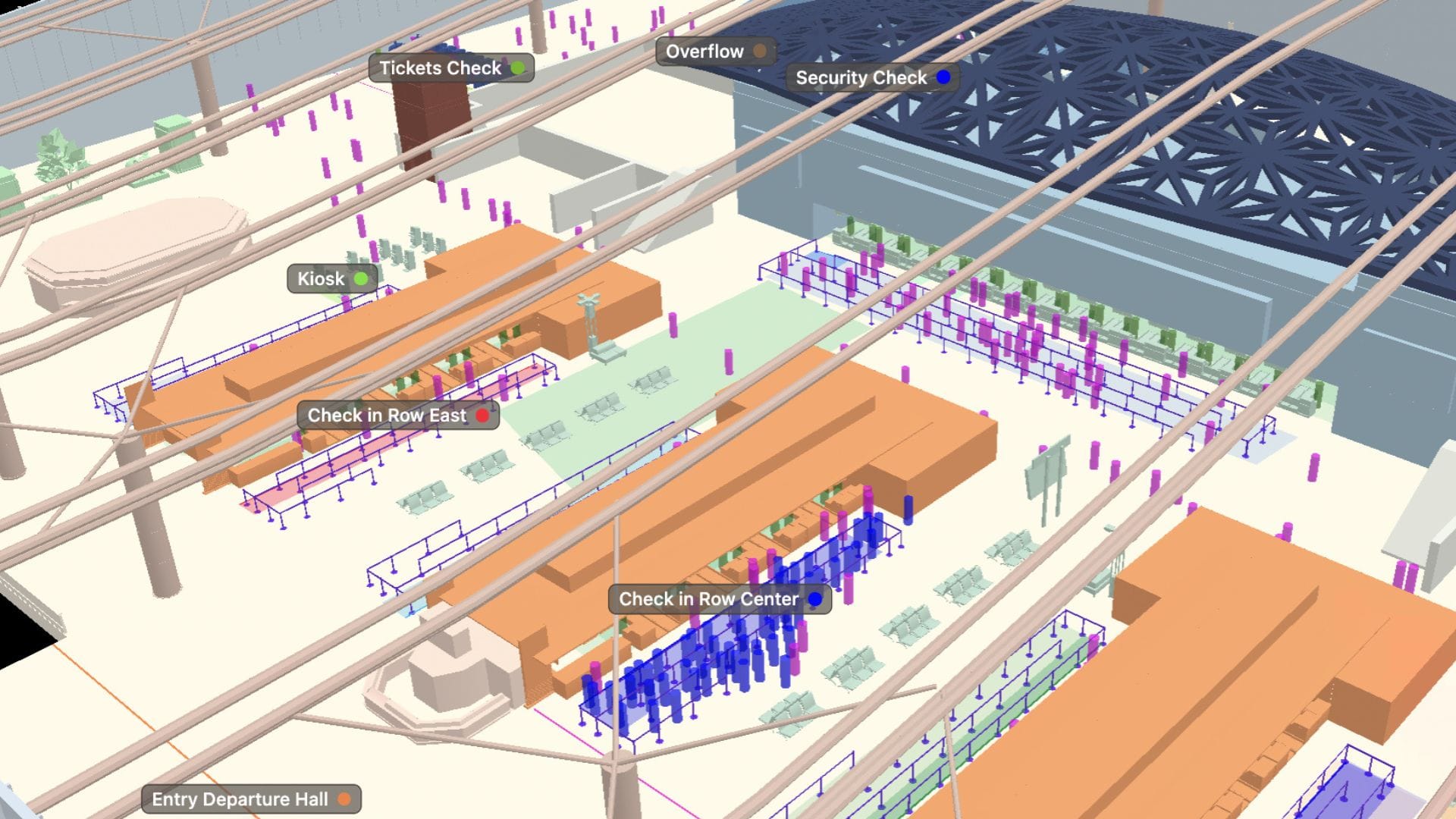

How 3D Spatial Intelligence powers Motional Digital Twins

The 3D data provided by LiDAR is only an enabler. The real value goes far beyond counting people in a given area or calculating wait times, just as smartphones can still send texts and make calls like older phones but derive their true value from everything else they make possible.

Each individual is represented by a unique ID and an accurate real-time position. Because any event recorded at a known place and time can be matched to the person standing there at that moment, external data, such as readings from other sensors, boarding pass scans with their flight data, and point-of-sale transactions, can be attributed to each specific person: a transformative capability that was unthinkable before.
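As an illustration of how such attribution could work, here is a minimal sketch that gates candidate tracks by time and distance and picks the nearest one. It is a simple greedy nearest-neighbour association, not Outsight's method; the `TrackSample` and `ScanEvent` schemas, the `attribute_event` function, the gate values and the flight number are all hypothetical. A production system would also have to resolve ambiguous cases, such as two people equally close to a scanner.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class TrackSample:
    """One tracked person at one instant (hypothetical schema)."""
    track_id: str
    t: float  # timestamp, seconds
    x: float  # position in the shared world frame, metres
    y: float


@dataclass
class ScanEvent:
    """An external event with a known place and time, e.g. a boarding pass scan."""
    t: float
    x: float  # position of the scanner, metres
    y: float
    payload: dict  # e.g. flight data read from the pass (hypothetical fields)


def attribute_event(event: ScanEvent, samples: Iterable[TrackSample],
                    max_dt: float = 0.5, max_dist: float = 1.0) -> Optional[str]:
    """Return the ID of the track nearest the event, within time and distance gates.

    Returns None when no track is close enough in both time and space.
    """
    best_id, best_dist = None, math.inf
    for s in samples:
        if abs(s.t - event.t) > max_dt:
            continue  # too far apart in time to be the same moment
        d = math.hypot(s.x - event.x, s.y - event.y)
        if d <= max_dist and d < best_dist:
            best_id, best_dist = s.track_id, d
    return best_id


if __name__ == "__main__":
    tracks = [TrackSample("A", 10.0, 0.3, 0.0), TrackSample("B", 10.1, 1.5, 0.0)]
    scan = ScanEvent(10.05, 0.0, 0.0, {"flight": "XX123"})  # hypothetical flight
    print(attribute_event(scan, tracks))
```

Once the scan is tied to a track ID, its payload can follow that person through the rest of the journey.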

For the first time, this makes it possible to capture, understand, and predict the Full Passenger Journey, unlocking unique insights that improve operations, enhance the passenger experience, and increase safety.

This is called a Motional Digital Twin: a real-time replica of the physical airport focused not on buildings or equipment, but on the dynamic reality of people and objects moving, interacting, and using available resources.

To learn more, take a look at our whitepaper, which describes this in more detail and explains why this new kind of Digital Twin is the right foundation for the most advanced AI workflows:

In conclusion, LiDAR cannot be meaningfully compared to limited, siloed legacy technologies with little or no integration into airport systems. On the contrary, it becomes the cornerstone for connecting information across the airport around what truly matters: the passenger.

Some predictions

It is always difficult to predict what will change in the future, but much easier to identify what is unlikely to change, as history has repeatedly shown across technology adoption cycles.

- Superior, cheaper, and more reliable hardware eventually replaces legacy technologies, even when those older solutions were once considered sufficient.

- When the new hardware reaches maturity, software that delivers tangible benefits makes the difference.

- That transformation is rarely driven by experts in the older technology; it is driven by specialized companies that are unencumbered by legacy approaches yet bring years of experience in the new technology, deep know-how, and enterprise-grade solutions.

We could say that airport operators will experience this transformation in the future, but that would not be accurate: it is not the future; it is already happening.

Most deployments have not yet been made public, but if your airport is not yet working on leveraging the value of 3D Spatial Intelligence, we invite you to talk to your peers: the world's most advanced international airports are already deploying, or preparing to deploy, LiDAR-based projects.