Understanding Occlusion-free 3D Perception

Unlike cameras or stereo vision systems, which perceive reality from a single point of view, LiDAR produces native 3D data, and that opens up new possibilities.

A significant advantage of 3D LiDAR perception over other optical sensors, such as cameras or camera-based people-counting solutions, is its ability to collect environmental information without obstructions (i.e., shadowless perception).

To better understand this concept, let's look at a typical scene in a crowded environment with four individuals.

These images show what different cameras in various positions would see from the scene:

Under these conditions, even the most advanced computer vision algorithms for people counting or object tracking will struggle to detect the hidden individuals and consistently follow them over time.

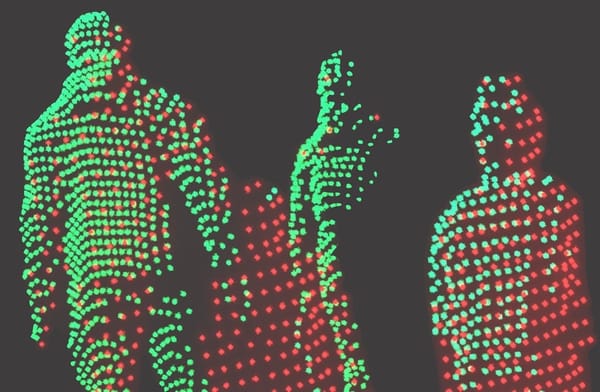

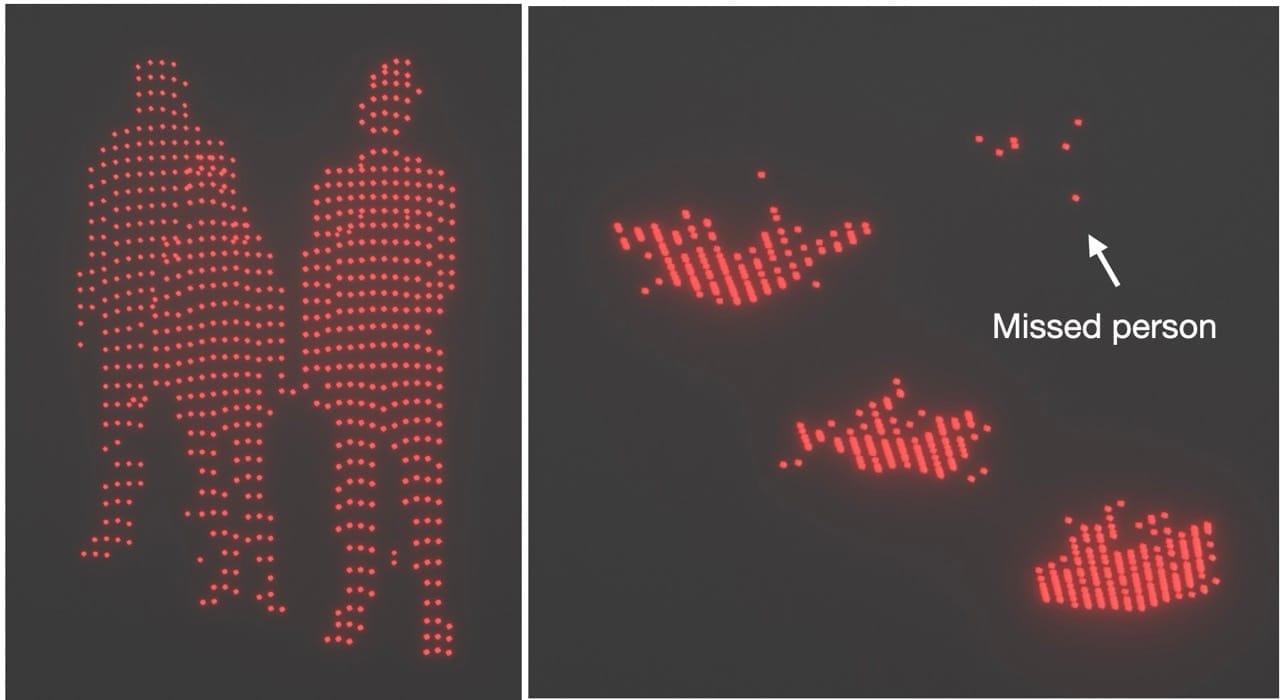

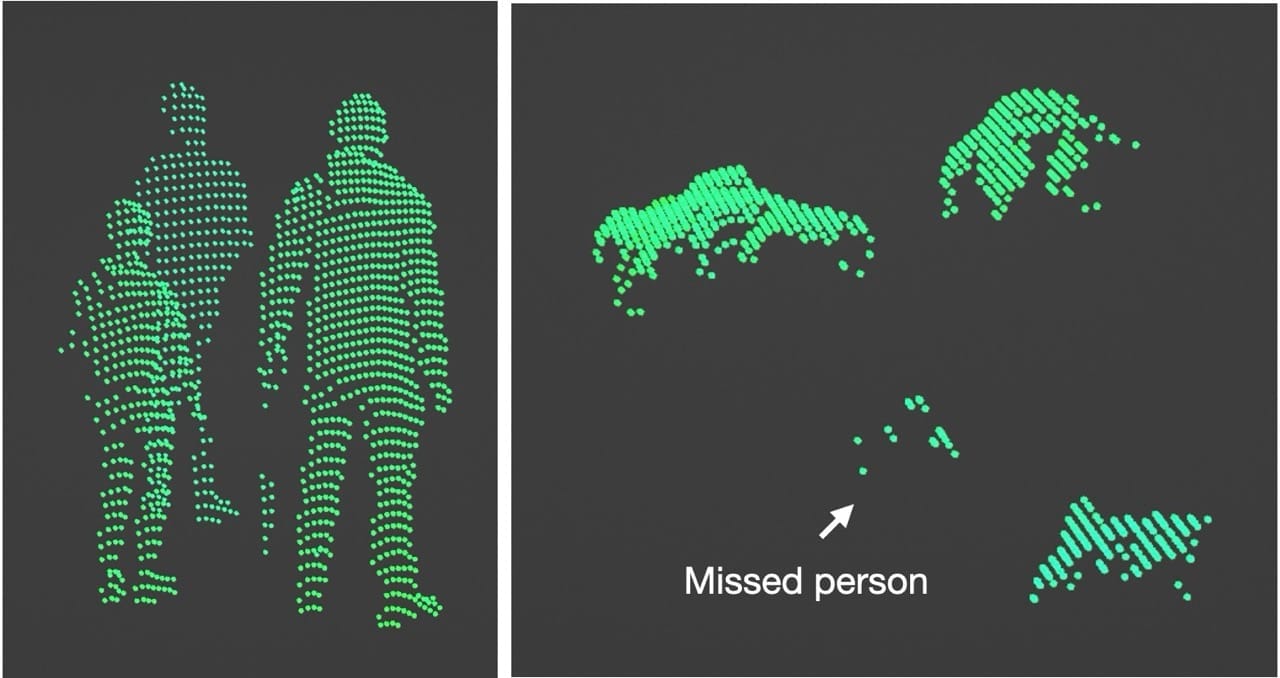

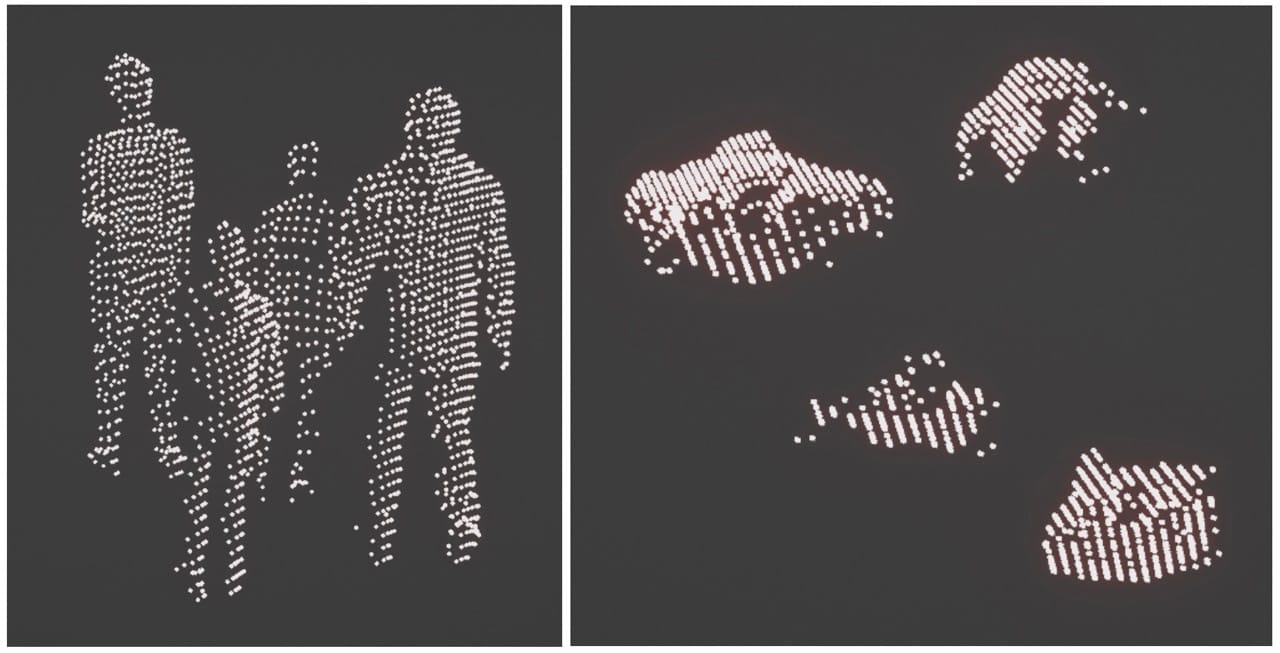

Now, let's view the same scene through a LiDAR sensor. With 3D vision, LiDAR accurately determines the position and size of each person.

Different LiDAR positions will miss different objects:

So, if LiDAR has the same limitations as cameras in these situations, why discuss LiDAR at all?

The answer is that 3D perception changes everything.

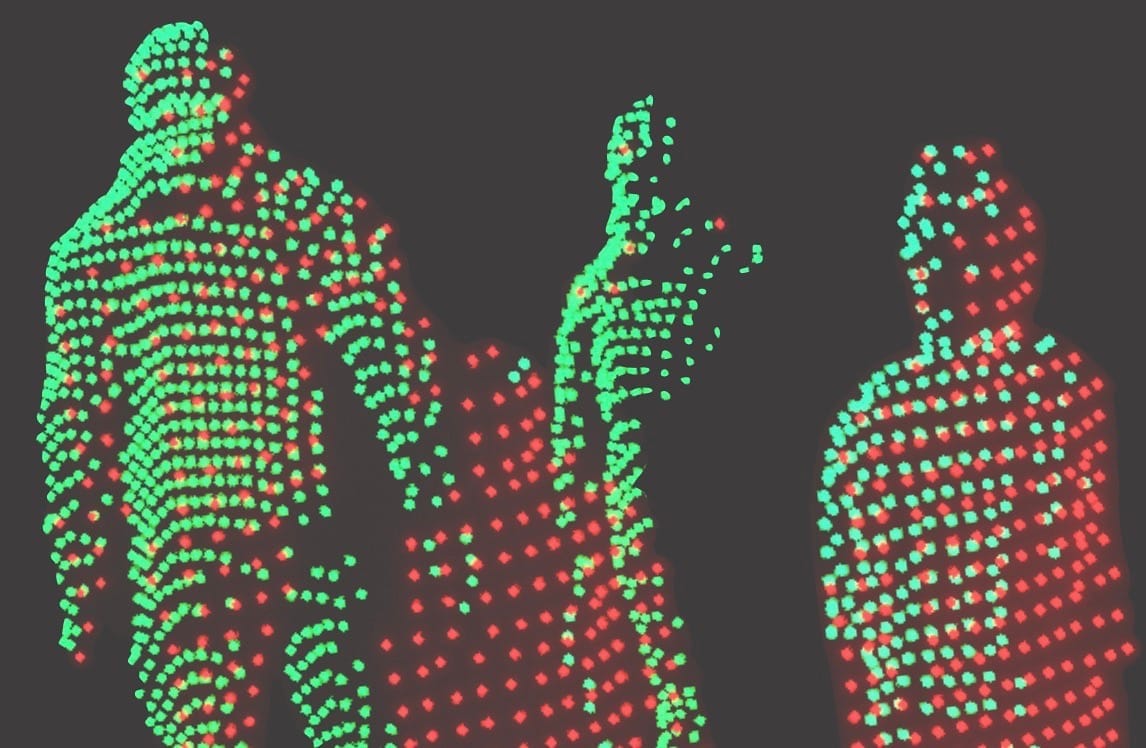

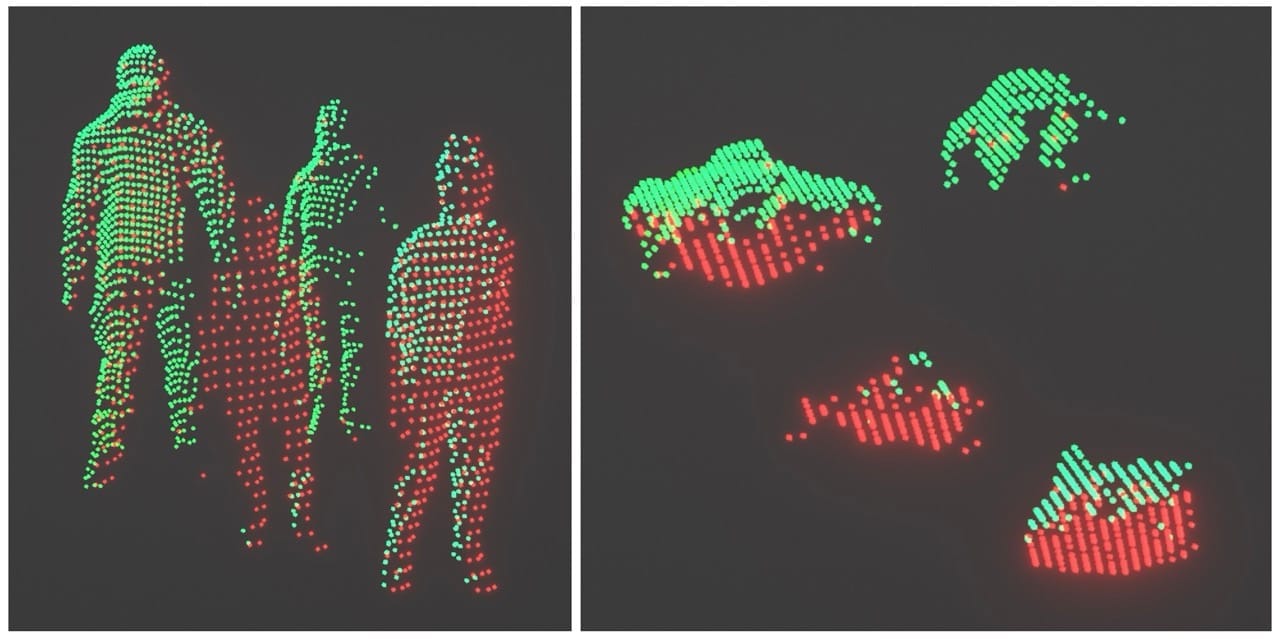

Because every LiDAR point is natively positioned in a 3D coordinate system (unlike a camera pixel, which does not record depth), fusion software like Outsight's Shift Perception can merge the data from each sensor into a single global 3D point cloud, once each sensor's position and orientation in that shared coordinate system are known.
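A minimal sketch of that merge, assuming each sensor's pose (rotation and position in a shared global frame) is already known from calibration. The helper names `yaw_pose`, `to_global`, and `fuse` are hypothetical, and the pose is reduced to a rotation about the vertical axis for brevity; this is not Outsight's API or implementation.

```python
import math

def yaw_pose(yaw_deg, tx, ty, tz):
    """Hypothetical helper: pose of a sensor rotated by yaw_deg about the
    vertical axis and placed at (tx, ty, tz) in the global frame."""
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    rotation = ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))
    return rotation, (tx, ty, tz)

def to_global(points, pose):
    """Map one sensor's local points into the global frame: p_g = R p + t."""
    rotation, t = pose
    return [
        tuple(sum(rotation[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
        for p in points
    ]

def fuse(clouds):
    """Concatenate several (points, pose) pairs into one global point cloud."""
    merged = []
    for points, pose in clouds:
        merged.extend(to_global(points, pose))
    return merged

# Two sensors face each other across a 10 m corridor; each sees the same
# person 5 m in front of it, i.e. at the same local coordinates.
sensor_a = ([(5.0, 0.0, 1.0)], yaw_pose(0.0, 0.0, 0.0, 0.0))
sensor_b = ([(5.0, 0.0, 1.0)], yaw_pose(180.0, 10.0, 0.0, 0.0))
merged = fuse([sensor_a, sensor_b])
# Both points land at (5, 0, 1) in the global frame, up to floating-point rounding.
```

Once expressed in the same frame, points from different sensors can simply be concatenated; a real installation would use full 3D rotations (roll, pitch, yaw).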

As a result, a Spatial AI solution like Outsight's Shift Analytics can work with the fused point cloud as if it came from a single virtual 3D sensor without occlusions. This is what we call shadowless perception.

As shown in the image, 1 + 1 = 3: the fused perception is better than what the first and the second sensor perceive separately, thanks to spatial consistency. Because both sensors' points live in the same coordinate system, each sensor fills in the other's blind spots.
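A toy top-down model makes the coverage part of this concrete. It assumes each person is a disc of hypothetical radius `BODY_RADIUS` and that a sensor sees a person only if no other disc crosses its line of sight to that person's center. It models only the union of what each sensor sees, not the spatial-consistency gains mentioned above.

```python
import math

BODY_RADIUS = 0.25  # hypothetical radius (m) of a person seen from above

def dist_point_segment(p, a, b):
    """Shortest distance from point p to the segment a-b (2D)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def visible_from(sensor, people, radius=BODY_RADIUS):
    """Indices of people whose center is not hidden behind another person."""
    seen = set()
    for i, target in enumerate(people):
        hidden = any(
            j != i and dist_point_segment(other, sensor, target) < radius
            for j, other in enumerate(people)
        )
        if not hidden:
            seen.add(i)
    return seen

# Four people, top-down view (meters); two sensors on opposite sides.
people = [(3.0, 0.0), (7.0, 0.0), (5.0, 3.0), (5.0, -3.0)]
seen_a = visible_from((0.0, 0.0), people)   # person 1 is hidden behind person 0
seen_b = visible_from((10.0, 0.0), people)  # person 0 is hidden behind person 1
fused = seen_a | seen_b                     # the union covers all four people
```

Each sensor alone misses one person, while the fused set contains all four.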

The challenges of shadowless perception

The challenge of calibration

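Merging data from different LiDAR sensors precisely requires knowing each sensor's exact position and orientation (its extrinsic calibration) in a shared 3D coordinate system, which takes appropriate software, tools, and methods.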
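As an illustration of one such method: when the same reference points can be matched in a sensor's local cloud and in the global frame (for example, hypothetical targets placed in the scene), a least-squares rigid fit recovers the sensor's pose. The sketch below is the 2D case of the generic Kabsch/Umeyama approach (yaw plus ground-plane translation), chosen for brevity; `fit_rigid_2d` is a hypothetical name and this is not a description of Outsight's calibration tools.

```python
import math

def fit_rigid_2d(src, dst):
    """Least-squares rotation (radians) and translation mapping src onto dst.

    src[i] and dst[i] must be the same physical point, measured in the
    sensor's local frame and in the global frame respectively.
    """
    n = len(src)
    sx = sum(x for x, _ in src) / n
    sy = sum(y for _, y in src) / n
    gx = sum(u for u, _ in dst) / n
    gy = sum(v for _, v in dst) / n
    num = den = 0.0
    for (x, y), (u, v) in zip(src, dst):
        x, y, u, v = x - sx, y - sy, u - gx, v - gy  # center both point sets
        num += x * v - y * u  # sum of 2D cross products
        den += x * u + y * v  # sum of dot products
    theta = math.atan2(num, den)  # angle minimizing the squared residuals
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    tx = gx - (c * sx - s * sy)
    ty = gy - (s * sx + c * sy)
    return theta, tx, ty
```

At least two distinct matched points are needed; real calibrations usually solve the full 3D problem and use many more correspondences to average out measurement noise.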

If the LiDARs are not correctly aligned in the same 3D coordinate system, they can easily create phantom points: the same physical surface seen by two LiDARs lands at two different positions and can be interpreted as two different objects in the scene.
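A worked example shows how quickly a small misalignment becomes visible. Assuming a hypothetical yaw (heading) error of 1° in one sensor's calibration, a point at range r from that sensor is rotated about it by that angle, which displaces it along a chord of length 2 * r * sin(e / 2). The same surface then shows up twice, about 17 cm apart at 10 m and about 35 cm apart at 20 m. The function name `phantom_offset` is illustrative.

```python
import math

def phantom_offset(range_m, yaw_error_deg):
    """Distance (m) between a point's true position and where a sensor with a
    yaw calibration error places it, for a point range_m meters away.

    Rotating a point about the sensor by an angle e moves it along a chord
    of length 2 * r * sin(e / 2).
    """
    return 2.0 * range_m * math.sin(math.radians(yaw_error_deg) / 2.0)

# Offset produced by a hypothetical 1 degree yaw error at several ranges.
for r in (5.0, 10.0, 20.0):
    print(f"{r:>4.0f} m -> {phantom_offset(r, 1.0) * 100:.1f} cm")
```

The error grows linearly with range, which is why large sites with long sensor-to-target distances need especially careful calibration.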

Calibrating a few LiDARs is much simpler than calibrating hundreds, a task our solution regularly handles for our customers in airports, train stations, and factories to anonymously follow the movement of thousands of people in crowded environments in real time.

The challenge of synchronization

When merging multiple point clouds from different LiDARs, a significant challenge is the lack of precise temporal synchronization.


Because the sensors are not synchronized, they do not observe all objects at the same instant. For example, a person scanned by one LiDAR at time t may be scanned by another LiDAR at time t + Δt, and in the meantime the person may have moved and changed posture. Merging the two scans as-is produces a blurred and imprecise fusion of data.
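One simple way to compensate, sketched here under loud assumptions: all sensors timestamp their data against a shared clock, and the person's velocity is known (in practice it would have to be estimated, for example by a tracker). Each observation is then extrapolated to a common reference time with a constant-velocity model. The 1.4 m/s walking speed and 50 ms offset are illustrative values, `Observation` and `extrapolate` are hypothetical names, and this is not necessarily how Outsight handles synchronization.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    t: float  # capture time in seconds on an assumed shared clock
    x: float  # position in the global frame, meters
    y: float

def extrapolate(obs, vx, vy, t_ref):
    """Move an observation to time t_ref assuming constant velocity (m/s)."""
    dt = t_ref - obs.t
    return Observation(t_ref, obs.x + vx * dt, obs.y + vy * dt)

# A person walking along x at an assumed 1.4 m/s, seen by two unsynchronized LiDARs.
vx, vy = 1.4, 0.0
seen_by_a = Observation(t=0.000, x=2.00, y=0.0)
seen_by_b = Observation(t=0.050, x=2.07, y=0.0)  # 50 ms later, 7 cm further along

raw_gap = abs(seen_by_b.x - seen_by_a.x)  # smear if the scans are merged as-is
aligned_b = extrapolate(seen_by_b, vx, vy, t_ref=seen_by_a.t)
residual_gap = abs(aligned_b.x - seen_by_a.x)  # close to zero after compensation
```

The constant-velocity model only corrects position; changes in posture during Δt remain, which is one reason tight timing between sensors still matters.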

The challenge of creating accurate analytics

Any analytics software or company that lacks fusion and processing capabilities able to precisely calibrate, synchronize, and merge many LiDAR sensors will struggle to provide correct metrics.
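A small example of how fusion quality affects a basic metric such as a people count. It assumes each sensor's detections are already expressed in the global frame and merges detections closer than a hypothetical `merge_radius` with a greedy rule; the function name and threshold are illustrative, not part of any Outsight product.

```python
import math

def count_people(detections_per_sensor, merge_radius=0.3):
    """Count distinct people from per-sensor (x, y) detections in the global
    frame. Detections closer than merge_radius meters to an already-kept
    detection are treated as the same person (greedy, order-dependent)."""
    centers = []  # one representative position per distinct person
    for detections in detections_per_sensor:
        for x, y in detections:
            if all(math.hypot(x - cx, y - cy) >= merge_radius for cx, cy in centers):
                centers.append((x, y))
    return len(centers)

sensor_a = [(3.0, 0.0), (5.0, 3.0), (5.0, -3.0)]
sensor_b = [(7.0, 0.0), (5.02, 3.01), (4.97, -3.0)]
naive = sum(len(d) for d in (sensor_a, sensor_b))  # 6: shared people counted twice
fused = count_people([sensor_a, sensor_b])         # 4 distinct people
```

Summing per-sensor counts double-counts people seen by both sensors. Conversely, if calibration or synchronization errors push the same person's detections further apart than `merge_radius`, they survive as phantom duplicates: with `merge_radius=0.01`, the same input yields 6. A real system might instead associate detections over time through tracking.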

The benefits of occlusion-free perception

The advantages of shadowless perception translate into several concrete benefits:

- Accurate Data Fusion: Merging data from multiple LiDAR sensors into a unified 3D point cloud ensures comprehensive scene understanding without phantom points.
- Improved Analytics: Shadowless perception eliminates occlusions, allowing accurate detection of all objects in a scene, even in crowded environments. This accuracy is essential for high-quality data analytics.
- Enhanced Tracking: Moving objects can be tracked continuously and without obstruction, improving the reliability of tracking results.
- Scalability: Advanced calibration and synchronization methods enable the integration of numerous sensors, making the approach scalable to large sites like airports, sports venues, and factories.
- Fewer Sensors per Square Meter: Without a good fusion solution, more sensors per square meter are needed; shadowless perception reduces the number of required sensors.
- Robustness: Each sensor feeds into a common pool of information, so a hardware malfunction in a single device only reduces the number of available points and does not become a single point of failure.
- Optimized Use of Different LiDAR Technologies: Different LiDAR manufacturers and models produce different scanning patterns. A shadowless perception solution like Outsight's leverages the best of each technology, creating a full 3D virtual sensor that surpasses the capabilities of each device separately.