How to Integrate a 3D Preprocessor into Your ITS Solution

For ITS (Intelligent Transportation Systems), processing LiDAR data with 3D software offers many ways to extract actionable information and build unique solutions.

LiDAR opens up many opportunities in ITS applications, including smart intersections, detection of VRUs (Vulnerable Road Users), detection of near-miss events, and improving the overall safety of both vehicles and pedestrians.

However, the adoption of LiDAR in these contexts has been limited by the complexity of processing its data.

From the ITS market's perspective, one of the greatest advantages of Outsight's processing software is data reduction: LiDAR sensors produce massive amounts of raw 3D data, but once that data is processed at the edge, alongside the sensors, the output becomes narrow-band data (data that needs only a narrow bandwidth to be transmitted). This enables, for example, low-power wireless communication.
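To make the narrow-band idea concrete, here is a back-of-the-envelope comparison between streaming a raw point cloud and streaming a list of tracked objects. The point count, bytes per point, object count, bytes per object and frame rate are all hypothetical illustration values, not Outsight or sensor specifications.

```python
# Back-of-the-envelope comparison of raw point-cloud bandwidth versus an
# object-list stream. All figures below are hypothetical examples.

def bitrate_mbps(items_per_frame: int, bytes_per_item: int, frame_rate_hz: float) -> float:
    """Return the stream bitrate in megabits per second (1 Mbit = 1e6 bits)."""
    bytes_per_second = items_per_frame * bytes_per_item * frame_rate_hz
    return bytes_per_second * 8 / 1e6

# Hypothetical raw stream: 100,000 points per frame, 16 bytes per point
# (x, y, z, intensity as 32-bit floats), 10 frames per second.
raw = bitrate_mbps(100_000, 16, 10)

# Hypothetical processed stream: 50 tracked objects per frame, 64 bytes per
# object (id, class, position, size, speed), 10 frames per second.
processed = bitrate_mbps(50, 64, 10)

print(f"raw: {raw:.1f} Mbit/s, processed: {processed:.3f} Mbit/s, "
      f"reduction: {raw / processed:.0f}x")
```

With these made-up numbers the object stream is about 500 times smaller (128 Mbit/s versus 0.256 Mbit/s). The real ratio depends on the sensor, the scene and the output configuration.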

As a first step, let's look at what a 3D software preprocessor is and how to use it.

3D Software Preprocessor

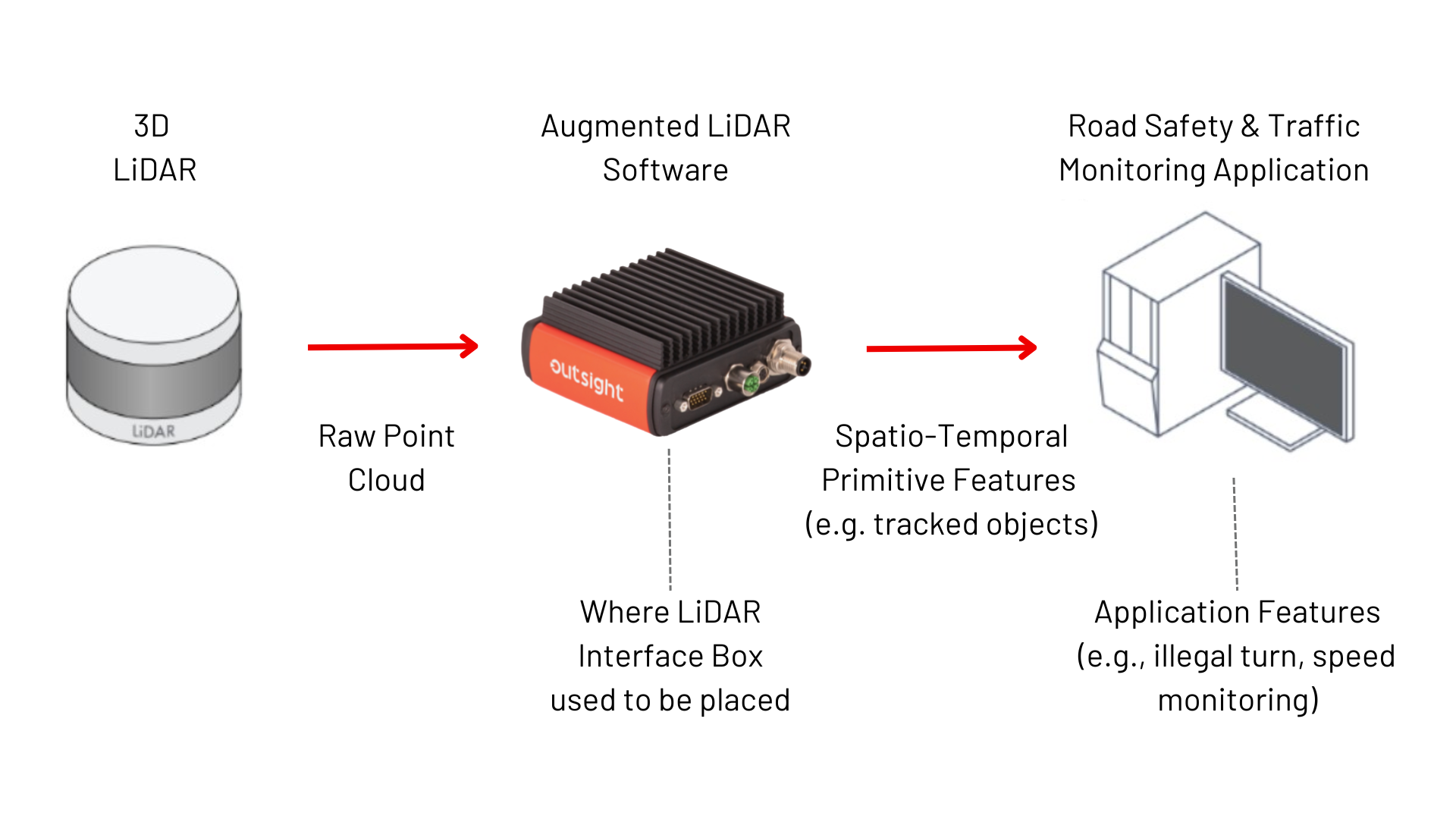

LiDAR preprocessing software is a new participant in the real-time 3D value chain, sitting between the LiDAR sensors and the application software.

As its name indicates, a preprocessor doesn't replace the application-specific software; it facilitates and accelerates its development, providing an easy way to use LiDAR in any application.

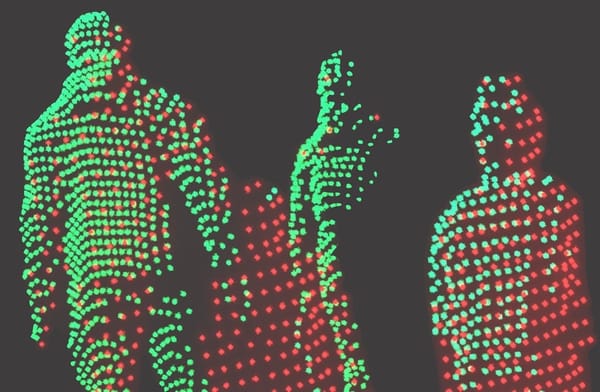

Simply put, Outsight's preprocessor enables LiDAR sensors to localize, track, and classify objects, transforming complex raw LiDAR data into actionable insights that ITS application developers can use in real time.

In a road safety and traffic monitoring application, for instance, raw LiDAR data can be turned into useful insights such as illegal turns, vehicle speeds and distances between vehicles, pedestrians inside or outside a crosswalk, the number and type of vehicles passing through a toll system, and many others.
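Once objects are tracked over time, insights such as speed and distance between vehicles reduce to simple geometry. The sketch below assumes you already have each tracked object's ground-plane position (in metres) and a timestamp per frame; the `TrackedPosition` type and its field names are hypothetical, not part of Outsight's output format.

```python
import math
from dataclasses import dataclass

@dataclass
class TrackedPosition:
    """Hypothetical record: one tracked object's position at one timestamp."""
    object_id: int
    timestamp_s: float  # seconds
    x_m: float          # metres, in a common ground frame
    y_m: float

def speed_kmh(prev: TrackedPosition, curr: TrackedPosition) -> float:
    """Average speed between two observations of the same object, in km/h."""
    if prev.object_id != curr.object_id:
        raise ValueError("positions belong to different objects")
    dt = curr.timestamp_s - prev.timestamp_s
    if dt <= 0:
        raise ValueError("timestamps must be increasing")
    distance_m = math.hypot(curr.x_m - prev.x_m, curr.y_m - prev.y_m)
    return distance_m / dt * 3.6  # m/s -> km/h

def gap_m(a: TrackedPosition, b: TrackedPosition) -> float:
    """Centre-to-centre distance between two objects at the same instant, in metres."""
    return math.hypot(a.x_m - b.x_m, a.y_m - b.y_m)

# Example: a car moves 2.5 m in 0.1 s and a second car is 20 m ahead of it.
p0 = TrackedPosition(object_id=7, timestamp_s=0.0, x_m=0.0, y_m=0.0)
p1 = TrackedPosition(object_id=7, timestamp_s=0.1, x_m=2.5, y_m=0.0)
lead = TrackedPosition(object_id=9, timestamp_s=0.1, x_m=22.5, y_m=0.0)
print(round(speed_kmh(p0, p1), 1))  # 90.0
print(gap_m(p1, lead))              # 20.0
```

Measuring centre to centre keeps the sketch short; a bumper-to-bumper gap would also subtract half of each vehicle's length, for example from its bounding-box size.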

This is done through real-time processing by Outsight's augmented LiDAR software, which is embedded in a convenient plug-and-play edge device, the Augmented LiDAR Box (ALB).

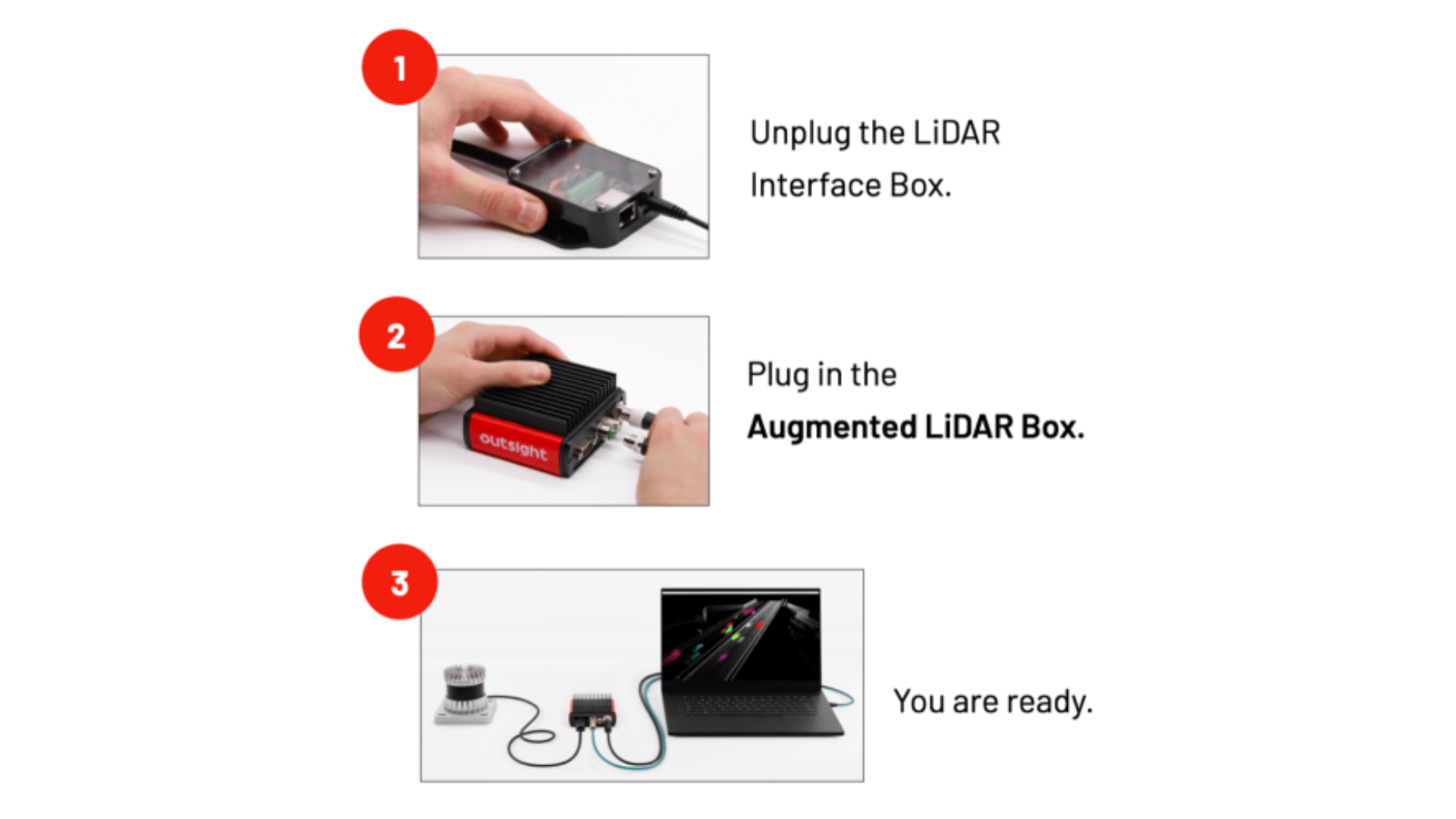

As shown below, the ALB replaces the LiDAR's interface box:

The 3D preprocessor setup depends on the number of LiDARs your application requires. While a smart tolling system might need only one LiDAR, a traffic monitoring system might need a dozen or more to meet its safety requirements.

The next sections explain how to use a 3D preprocessor in single-LiDAR and multi-LiDAR setups.

Single LiDAR setup

For a single-LiDAR setup, the installation takes three steps:

1. Disconnect the LiDAR interface box from your LiDAR.
2. Plug the Augmented LiDAR Box (ALB) into the LiDAR in its place.
3. Connect the ALB to the host computer.

Multi-LiDAR setup

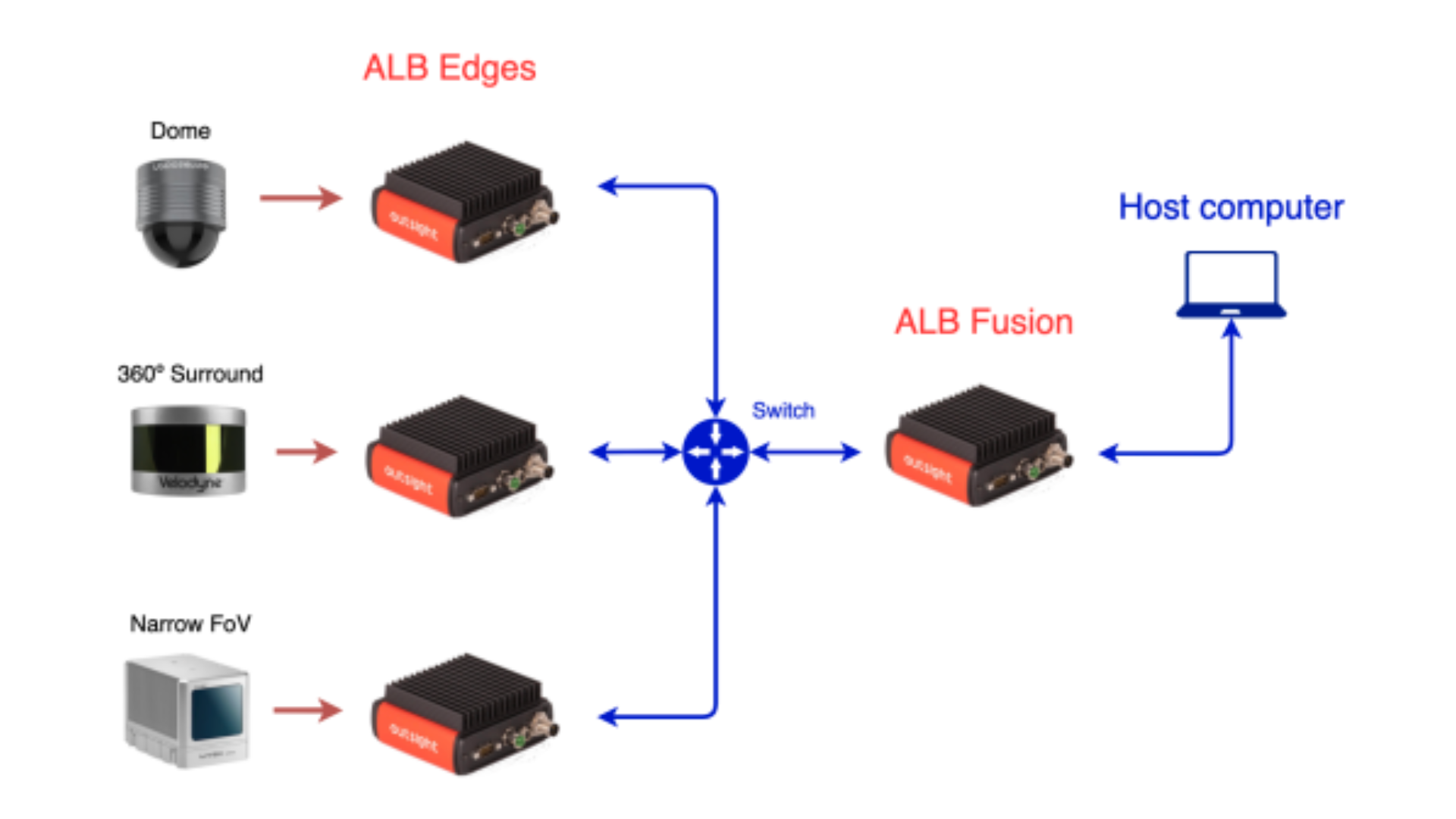

Multi-LiDAR setups are useful when several sensors are needed to cover wider areas while minimizing the shadows and blind spots created by static or moving objects.

In those cases, the setup includes an additional Augmented LiDAR Box, called Fusion, which merges the data from each sensor and creates a single super-sensor from the application software's point of view.

Our real-time preprocessor shields the application software from this complexity: the combined LiDARs are seen as a single SuperLIDAR, and the output follows one unified, standard format regardless of the input, with no additional integration work.

Fusing several LiDARs is an advanced task because it requires both synchronizing the sensors and calibrating them (determining the position and orientation of each LiDAR relative to the others). Our preprocessor takes care of both.
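To picture what calibration means geometrically, each LiDAR's pose (position and orientation) defines a rigid transform that maps its points into a shared frame, where the clouds can then be merged. The pure-Python sketch below illustrates only this geometry: it assumes the poses are already known, handles rotation about the vertical axis only (a real calibration also covers roll and pitch), ignores synchronization, and is not how the ALB Fusion is implemented. `to_common_frame` and `fuse` are hypothetical helpers.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point3 = Tuple[float, float, float]

def to_common_frame(points: Iterable[Point3], yaw_rad: float, translation: Point3) -> List[Point3]:
    """Rotate each point about the vertical (z) axis by yaw_rad, then translate it."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]

def fuse(sensors: Sequence[Tuple[Iterable[Point3], float, Point3]]) -> List[Point3]:
    """Merge the point clouds of calibrated sensors into one 'super-sensor' cloud."""
    merged: List[Point3] = []
    for points, yaw_rad, translation in sensors:
        merged.extend(to_common_frame(points, yaw_rad, translation))
    return merged

# Hypothetical calibration: LiDAR A defines the common frame; LiDAR B is mounted
# 10 m away along x and rotated 180 degrees so that it faces LiDAR A.
seen_by_a = [(4.0, 0.0, 0.0)]  # a pedestrian 4 m in front of A
seen_by_b = [(6.0, 0.0, 0.0)]  # the same pedestrian, 6 m in front of B

merged = fuse([
    (seen_by_a, 0.0, (0.0, 0.0, 0.0)),
    (seen_by_b, math.pi, (10.0, 0.0, 0.0)),
])
print(merged)  # both points land at approximately (4, 0, 0)
```

Because both observations map to the same location, the application sees one consistent scene instead of two sensor-specific views.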

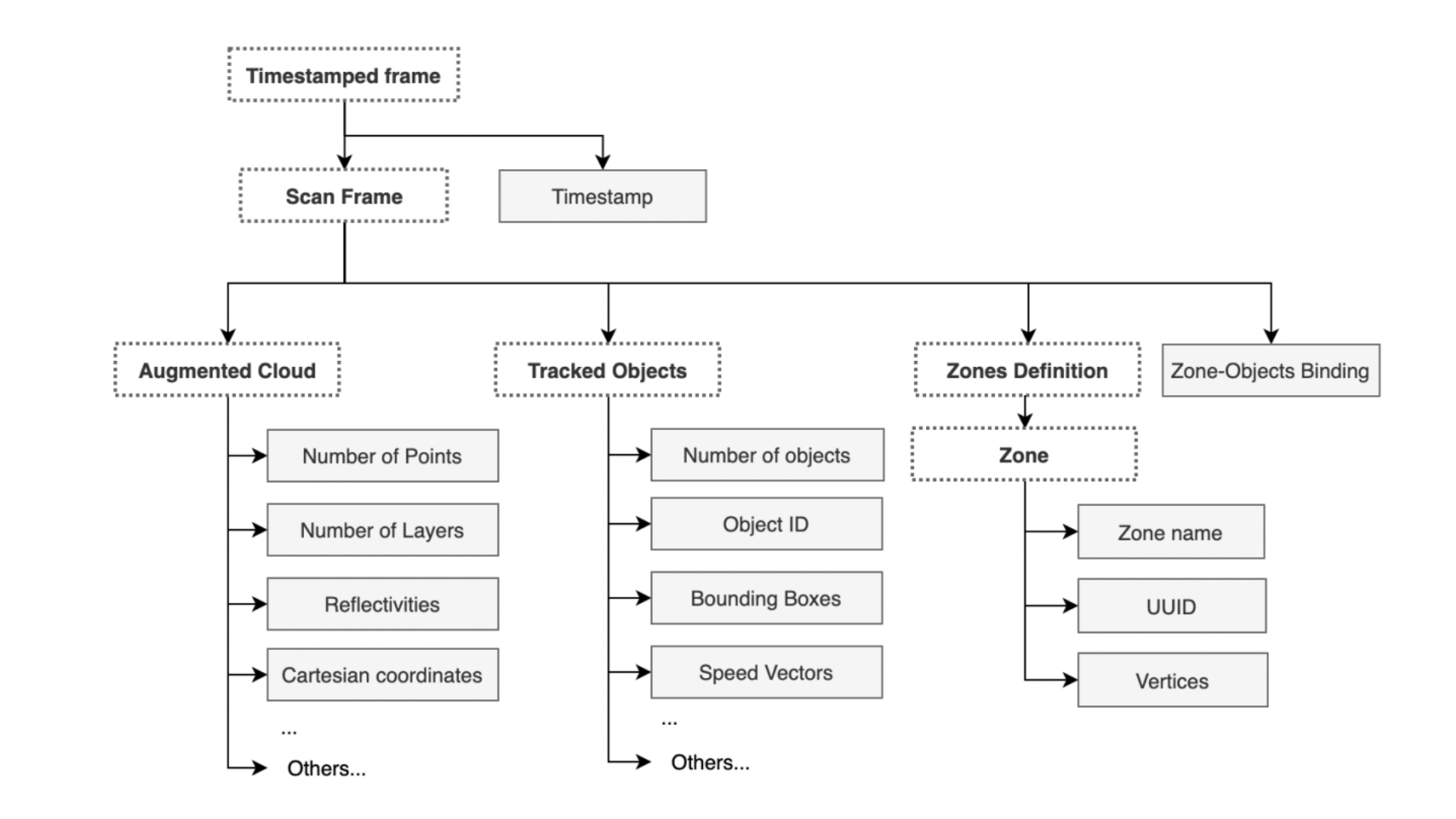

The ALB Fusion outputs a data stream in an open standard format called OSEF (Open Serialization Format), a binary serialization format based on TLV (Type-Length-Value) encoding and organized as a tree.
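To illustrate what a TLV encoding with a tree structure looks like, here is a generic encoder and decoder. It is a teaching sketch, not an OSEF parser: the 4-byte type and 4-byte length fields in little-endian order, and all type ids, are assumptions made for this example. Consult the OSEF specification for the real layout, and use the provided parser in practice.

```python
import struct

HEADER = struct.Struct("<II")  # assumed layout: 4-byte type, 4-byte length, little-endian

def encode(type_id: int, value: bytes) -> bytes:
    """Encode one TLV record: header followed by the raw value bytes."""
    return HEADER.pack(type_id, len(value)) + value

def decode(buffer: bytes, container_types: set) -> list:
    """Decode consecutive TLV records into a tree of (type_id, value) tuples.

    Records whose type is in container_types hold nested TLV records and are
    decoded recursively; any other value is returned as raw bytes (a leaf).
    """
    nodes = []
    offset = 0
    while offset < len(buffer):
        if offset + HEADER.size > len(buffer):
            raise ValueError("truncated TLV header")
        type_id, length = HEADER.unpack_from(buffer, offset)
        offset += HEADER.size
        value = buffer[offset:offset + length]
        if len(value) != length:
            raise ValueError("truncated TLV value")
        offset += length
        if type_id in container_types:
            nodes.append((type_id, decode(value, container_types)))
        else:
            nodes.append((type_id, value))
    return nodes

# Hypothetical type ids, chosen only for this example.
TIMESTAMPED_FRAME, TIMESTAMP, SCAN_FRAME, OBJECT_COUNT = 1, 2, 3, 4

scan = encode(SCAN_FRAME, encode(OBJECT_COUNT, struct.pack("<I", 2)))
root = encode(TIMESTAMPED_FRAME, encode(TIMESTAMP, struct.pack("<d", 1.5)) + scan)

tree = decode(root, container_types={TIMESTAMPED_FRAME, SCAN_FRAME})
print(tree)
```

The length field is what makes the format self-describing: a reader can skip any record whose type it does not recognize without understanding its contents.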

Each frame has a single root, 'Timestamped frame', which holds the timestamp of the processed frame. Under this root, a 'Scan frame' sub-tree contains all the augmented data generated for that frame.
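Because every frame hangs off the same root, application code reaches the augmented data by walking a fixed path of keys. A small helper such as the hypothetical `get_tracked_objects` below keeps that path in one place and returns `None` when a frame lacks the sub-tree. The key names are the ones used in this article's parsing example; the helper assumes the parsed nodes are dictionary-like.

```python
from collections.abc import Mapping

def get_tracked_objects(frame_dict):
    """Return the tracked-objects sub-tree of a parsed frame, or None if it is missing.

    Follows the key path used in this article's parsing example:
    timestamped_data -> scan_frame -> tracked_objects.
    """
    node = frame_dict
    for key in ("timestamped_data", "scan_frame", "tracked_objects"):
        if not isinstance(node, Mapping) or key not in node:
            return None
        node = node[key]
    return node

# Synthetic frames for illustration only; real frames come from the OSEF parser.
frame_with_objects = {
    "timestamped_data": {"scan_frame": {"tracked_objects": {"object_id": [4, 8]}}}
}
empty_frame = {"timestamped_data": {"scan_frame": {}}}

print(get_tracked_objects(frame_with_objects))  # {'object_id': [4, 8]}
print(get_tracked_objects(empty_frame))         # None
```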

Now that we have covered single- and multi-LiDAR setups, let's explore how they apply to ITS use cases.

The building blocks for your ITS solution

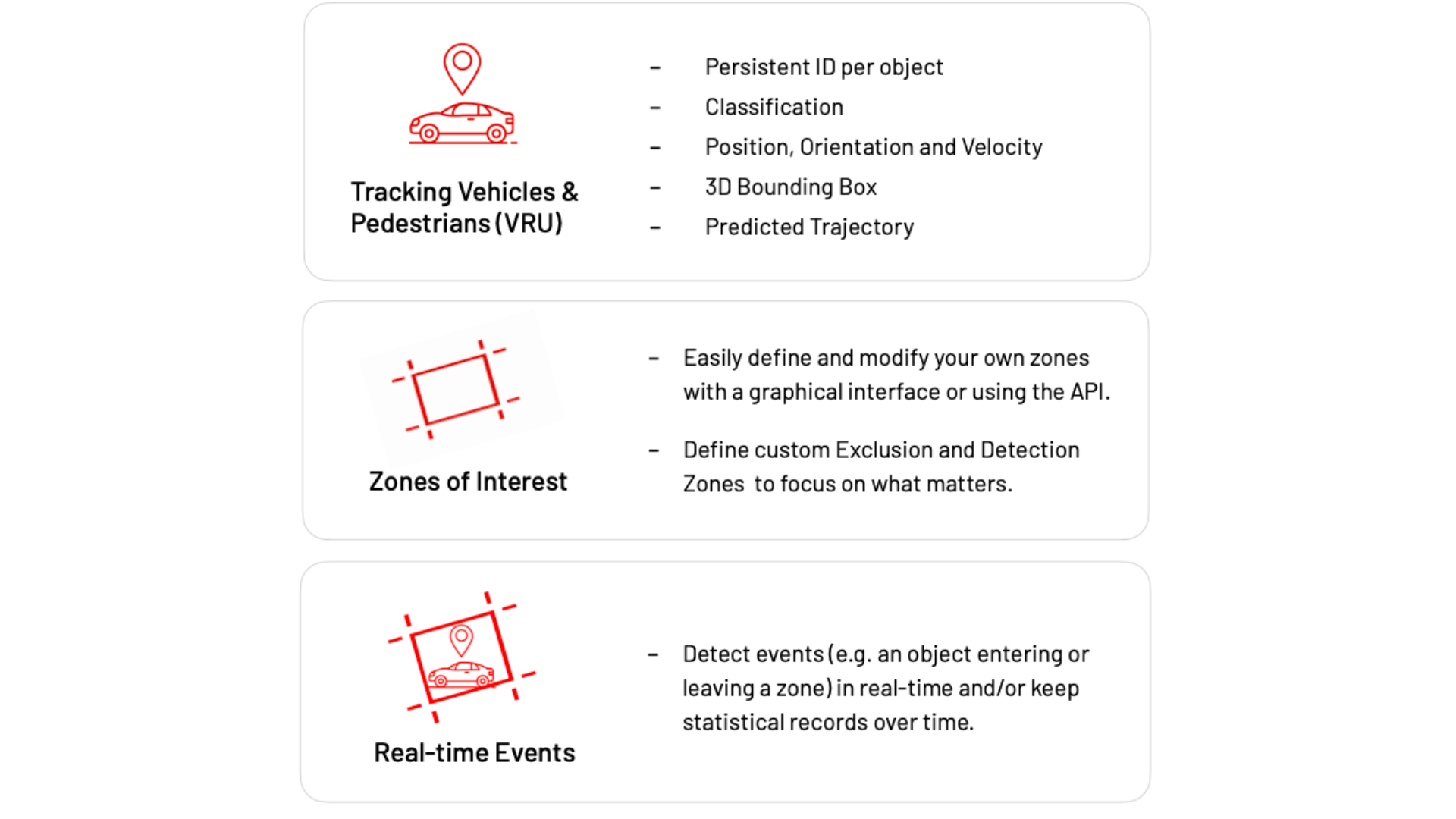

Among the many features the Augmented LiDAR Box© provides, you can build almost any ITS use case by combining just three feature blocks.

For example, in a road safety application, you can monitor pedestrian safety at crosswalks by combining two feature blocks: tracking of vehicles and pedestrians (VRUs), and zones of interest.
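On the application side, combining tracking with zones of interest can be as simple as a point-in-polygon test. The sketch below uses the classic ray-casting algorithm and assumes you have the ground-plane (x, y) positions of tracked pedestrians and vehicles, already filtered by class. The zone coordinates and the alert rule (a pedestrian on the crosswalk while a vehicle is in the approach zone) are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]

def in_zone(point: Point, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: True if point lies inside the polygon (coordinates in metres)."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray going right from the point cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def crosswalk_alert(pedestrians, vehicles, crosswalk, approach_zone) -> bool:
    """Alert when a pedestrian is on the crosswalk while a vehicle is in its approach zone."""
    pedestrian_on_crosswalk = any(in_zone(p, crosswalk) for p in pedestrians)
    vehicle_approaching = any(in_zone(v, approach_zone) for v in vehicles)
    return pedestrian_on_crosswalk and vehicle_approaching

# Hypothetical zones of interest, in metres in the sensor's ground frame.
CROSSWALK = [(0.0, 0.0), (4.0, 0.0), (4.0, 10.0), (0.0, 10.0)]
APPROACH = [(-20.0, 0.0), (0.0, 0.0), (0.0, 10.0), (-20.0, 10.0)]

print(crosswalk_alert([(2.0, 5.0)], [(-8.0, 3.0)], CROSSWALK, APPROACH))  # True
print(crosswalk_alert([(6.0, 5.0)], [(-8.0, 3.0)], CROSSWALK, APPROACH))  # False
```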

In another example, checking whether a vehicle has been parked in a no-parking zone for longer than a given period requires all three feature blocks.
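The extra ingredient in the illegal-parking case is time: an object must stay in the zone longer than a limit. The `DwellTimer` class below is a hypothetical sketch that assumes you already know, for each frame, which tracked object ids are inside the no-parking zone (for example from a point-in-polygon test), and that object ids remain stable while an object is tracked.

```python
class DwellTimer:
    """Track how long each object has stayed inside a zone and flag long stays.

    Feed it, frame by frame, the frame timestamp (in seconds) and the ids of the
    objects currently inside the zone.
    """

    def __init__(self, max_dwell_s: float):
        self.max_dwell_s = max_dwell_s
        self.entered_at = {}   # object_id -> timestamp when it entered the zone
        self.reported = set()  # ids already flagged, to avoid repeated alerts

    def update(self, timestamp_s: float, ids_in_zone) -> list:
        """Return the ids whose dwell time has just exceeded the limit."""
        ids_in_zone = set(ids_in_zone)
        # Forget objects that left the zone (or are no longer tracked).
        for object_id in list(self.entered_at):
            if object_id not in ids_in_zone:
                del self.entered_at[object_id]
                self.reported.discard(object_id)
        alerts = []
        for object_id in ids_in_zone:
            start = self.entered_at.setdefault(object_id, timestamp_s)
            if timestamp_s - start > self.max_dwell_s and object_id not in self.reported:
                self.reported.add(object_id)
                alerts.append(object_id)
        return sorted(alerts)

# Hypothetical limit: flag vehicles that stay more than 120 s in the zone.
timer = DwellTimer(max_dwell_s=120.0)
print(timer.update(0.0, [1, 2]))  # []
print(timer.update(60.0, [1]))    # []   (vehicle 2 left the zone)
print(timer.update(121.0, [1]))   # [1]
print(timer.update(130.0, [1]))   # []   (already reported)
```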

The following illustrations summarize what each feature block contains:

From these examples, we can see that there are many important insights that a real-time LiDAR preprocessor can provide.

Once you have decided on the scope and features of your ITS application, the next step is to complete its setup. The next section shows you how.

Application setup and use case examples

The ALB can process both live data from a LiDAR sensor and recordings in .pcap format in real time.

Using the provided OSEF parser code sample below, your application can read either an OSEF recording or the real-time OSEF output of the ALB on your preferred computing platform or framework.

```python
import osef  # Import the OSEF parsing library

ALB_IP = "192.168.2.2"  # IP address of the ALB

for frame_index, frame_dict in enumerate(osef.parser.parse(f"tcp://{ALB_IP}")):
    # Extract data from the scan frame
    tracked_objects = frame_dict["timestamped_data"]["scan_frame"]["tracked_objects"]
    object_ids = tracked_objects["object_id"]
    class_names = tracked_objects["class_id_array"]["class_name"]

    # Use the frame's data here
```

```python
import osef  # Same setup as in the previous snippet

ALB_IP = "192.168.2.2"  # IP address of the ALB

# Declare the categories you want to take into account
VEHICLE_NAMES = ["TRUCK", "BUS"]

for frame_index, frame_dict in enumerate(osef.parser.parse(f"tcp://{ALB_IP}")):
    tracked_objects = frame_dict["timestamped_data"]["scan_frame"]["tracked_objects"]
    object_ids = tracked_objects["object_id"]
    class_names = tracked_objects["class_id_array"]["class_name"]

    for object_id, class_name, bbox in zip(
        object_ids,
        class_names,
        tracked_objects["bbox_sizes"].tolist(),
    ):
        # Skip the object if it is not classified as a vehicle of interest
        if class_name not in VEHICLE_NAMES:
            continue

        # Process the vehicle of interest here (e.g. its ID and bounding-box size)
```
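Because the filtering logic only depends on the key layout of each frame, it can be factored into a function that accepts any iterable of parsed frames. This is a hypothetical refactoring of the example above (the name `vehicles_of_interest` is ours, not part of the osef library); it lets the same logic run on live ALB output, on a recording, or on synthetic frames in unit tests.

```python
from typing import Iterable, Iterator, Sequence

VEHICLE_NAMES = ("TRUCK", "BUS")

def vehicles_of_interest(
    frames: Iterable[dict], vehicle_names: Sequence[str] = VEHICLE_NAMES
) -> Iterator[tuple]:
    """Yield (frame_index, object_id, class_name, bbox_size) for the selected classes.

    `frames` is any iterable of parsed frame dictionaries with the key layout
    used above, e.g. the output of the OSEF parser or synthetic test frames.
    """
    for frame_index, frame_dict in enumerate(frames):
        tracked_objects = frame_dict["timestamped_data"]["scan_frame"]["tracked_objects"]
        for object_id, class_name, bbox in zip(
            tracked_objects["object_id"],
            tracked_objects["class_id_array"]["class_name"],
            tracked_objects["bbox_sizes"],
        ):
            # Keep only the classes the application cares about
            if class_name in vehicle_names:
                yield frame_index, object_id, class_name, bbox
```

With live data, the call would look like `vehicles_of_interest(osef.parser.parse(f"tcp://{ALB_IP}"))`; in a test, pass a list of hand-written frame dictionaries instead.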

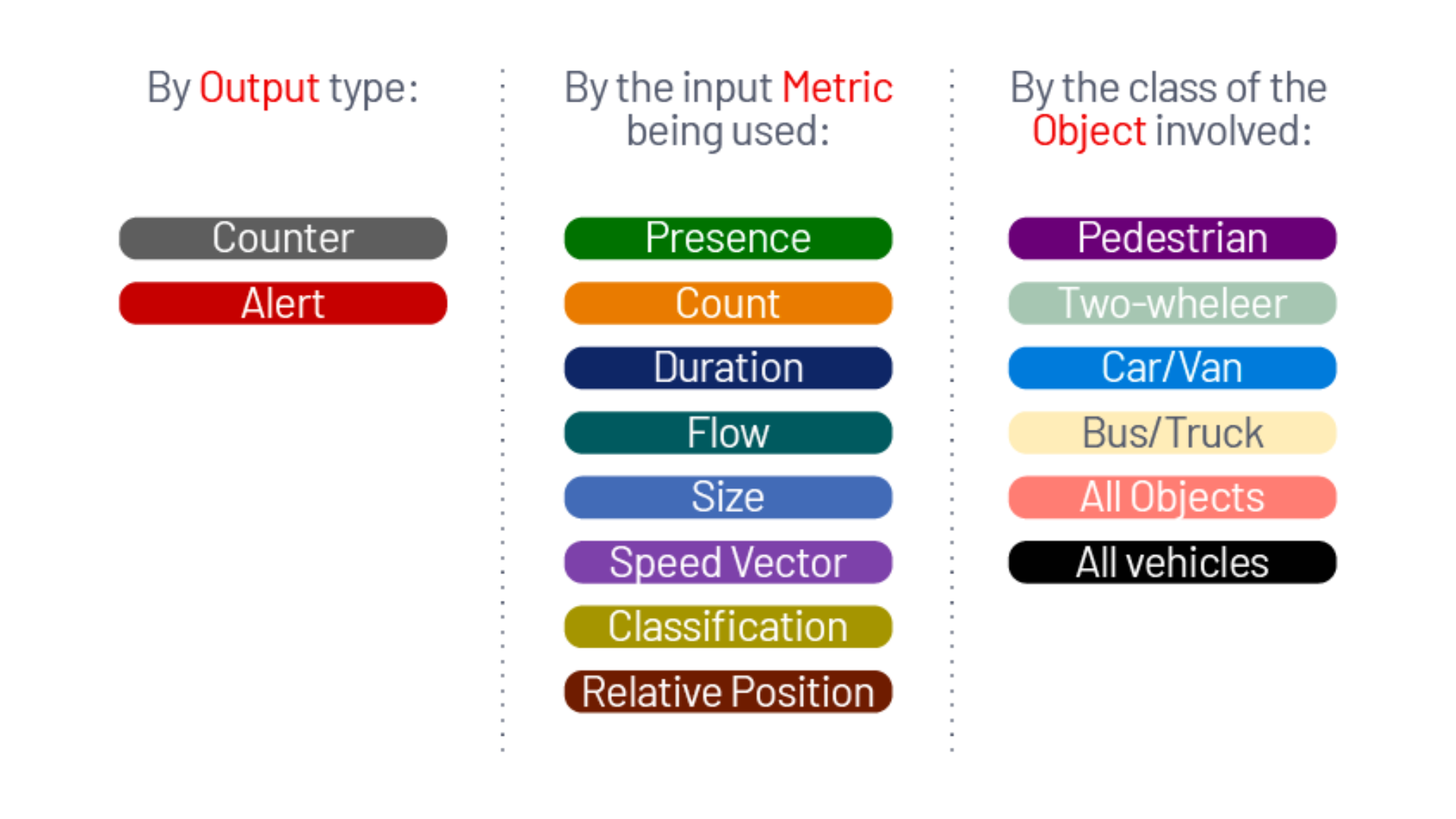

Once you have parsed and filtered the OSEF data, it's time to build your application. The application can generate alerts or statistics from the processed data, using the metrics available in OSEF, such as object counts or object classification (the software provides many object classes).
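Counting needs one subtlety: a tracked vehicle appears in many consecutive frames, so a per-frame count measures occupancy, while a traffic volume should count distinct object ids. The sketch below shows both; it assumes object ids are not reused by the tracker during the counting period, and the class names in the example are just the ones from the filtering snippet.

```python
from collections import Counter, defaultdict

def count_per_frame(class_names) -> Counter:
    """Number of objects of each class visible in a single frame (e.g. occupancy)."""
    return Counter(class_names)

class UniqueCounter:
    """Number of distinct tracked objects per class across frames (e.g. traffic volume)."""

    def __init__(self):
        self.seen = defaultdict(set)  # class_name -> set of object ids

    def update(self, object_ids, class_names) -> None:
        for object_id, class_name in zip(object_ids, class_names):
            self.seen[class_name].add(object_id)

    def totals(self) -> dict:
        return {name: len(ids) for name, ids in self.seen.items()}

# Two hypothetical frames: truck 1 stays in view, truck 3 appears in the second.
frames = [([1, 2], ["TRUCK", "BUS"]), ([1, 3], ["TRUCK", "TRUCK"])]

volume = UniqueCounter()
for object_ids, class_names in frames:
    volume.update(object_ids, class_names)

print(count_per_frame(frames[-1][1]))  # Counter({'TRUCK': 2})
print(volume.totals())                 # {'TRUCK': 2, 'BUS': 1}
```

Summing the per-frame counts here would report three trucks instead of two, which is why volumes are computed on unique ids.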

The table below shows an overview of all the combinations available for your ITS application.

Next, we will look at some use case recipes (simple code snippets) that help you build a complete ITS solution quickly and easily.
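As a flavor of what such a recipe can look like, here is a hypothetical sketch (not taken from Outsight's ITS LiDAR CookBook) of class-wise counting at a virtual line, the core of flow monitoring or class-based tolling. It assumes each tracked object comes with a stable object id, a class name and a ground-plane (x, y) position.

```python
from collections import Counter

def side_of_line(point, a, b) -> float:
    """2D cross product: > 0 if point is left of the line a->b, < 0 if right, 0 if on it."""
    return (b[0] - a[0]) * (point[1] - a[1]) - (b[1] - a[1]) * (point[0] - a[0])

class LineCrossingCounter:
    """Count, per class, the tracked objects that cross a virtual line."""

    def __init__(self, a, b):
        self.a, self.b = a, b
        self.last_side = {}      # object_id -> last non-zero side value
        self.counts = Counter()  # class_name -> number of crossings

    def update(self, objects) -> None:
        """objects: iterable of (object_id, class_name, (x, y)) for one frame."""
        for object_id, class_name, position in objects:
            side = side_of_line(position, self.a, self.b)
            if side == 0:
                continue  # exactly on the line: decide on a later frame
            previous = self.last_side.get(object_id)
            if previous is not None and (previous > 0) != (side > 0):
                self.counts[class_name] += 1  # sign changed: the object crossed
            self.last_side[object_id] = side

# Hypothetical virtual line along x = 0, from (0, -5) to (0, 5), in metres.
counter = LineCrossingCounter((0.0, -5.0), (0.0, 5.0))
counter.update([(1, "TRUCK", (-3.0, 0.0)), (2, "BUS", (-6.0, 1.0))])
counter.update([(1, "TRUCK", (2.0, 0.0)), (2, "BUS", (-1.0, 1.0))])
counter.update([(2, "BUS", (1.5, 1.0))])
print(counter.counts)  # Counter({'TRUCK': 1, 'BUS': 1})
```

For simplicity, the sketch treats the line as infinite and counts crossings in both directions. A production version would typically restrict counting to a lane (for example with a zone of interest), filter by direction, and drop ids that are no longer tracked.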

To see the recipes for other use cases, check out Outsight's ITS LiDAR CookBook, which provides dozens of them.

To see how all the steps described so far work in practice, along with the configuration options available during setup, watch the following video:

Conclusion

From flow monitoring to illegal parking alerts and class-based automated tolling, LiDAR offers a wide range of possibilities in ITS once it is combined with the right 3D preprocessor software.

By converting large amounts of raw 3D data into narrow-band data that is easy to understand and manipulate, Outsight's 3D preprocessor software gives ITS developers access to a wide range of actionable data and insights, unlocking many new LiDAR ITS applications.

To learn how to apply other simple code snippets (recipes) to the output of Outsight's software and obtain precise, valuable results for ITS use cases, read the latest version of our ITS LiDAR Cookbook.